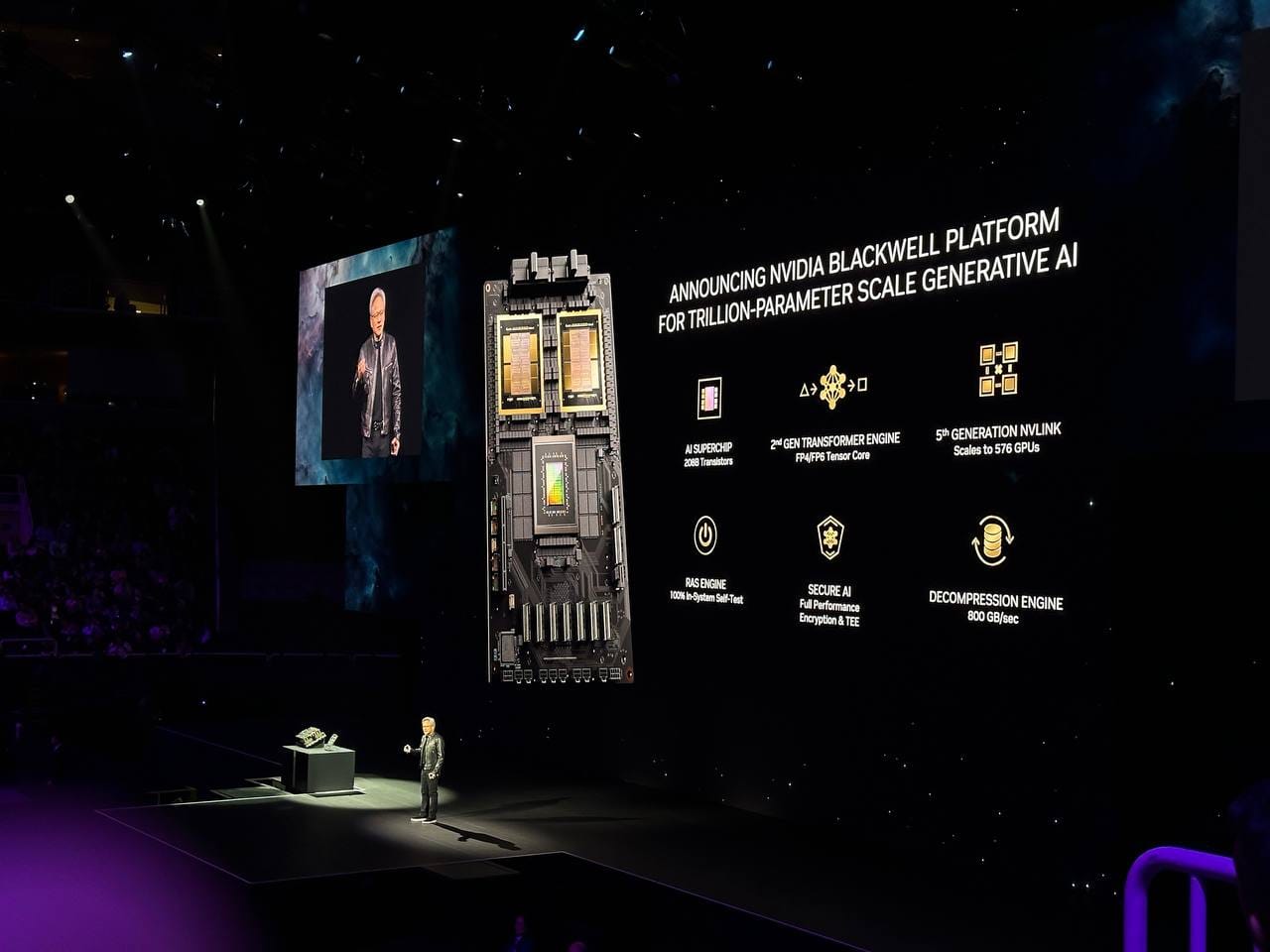

NVIDIA introduces the Blackwell architecture, a technology that will power "the new industrial revolution"

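NVIDIA announced its new Blackwell architecture at its GTC keynote. Blackwell succeeds the Hopper architecture and is designed for training and real-time inference of LLMs with up to 10 trillion parameters. According to NVIDIA, a Blackwell-based GB200 NVL72 system delivers up to a 30x performance increase for LLM inference and up to a 25x reduction in cost and energy consumption compared with the same number of Hopper-based H100 GPUs.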

Named in honor of David Harold Blackwell, a mathematician and the first Black scholar inducted into the National Academy of Sciences, the Blackwell architecture supersedes NVIDIA's Hopper architecture. Its accelerated computing technologies enable AI training and real-time inference for LLMs of up to 10 trillion parameters at a fraction of the cost and energy consumption of its predecessor. NVIDIA expects these advances to unlock breakthroughs in fields as diverse as data processing, computer-aided drug design, and quantum computing. Many industry-leading organizations are expected to adopt Blackwell, including Amazon Web Services, Dell, Google, Meta, Microsoft, OpenAI, Oracle, Tesla, and xAI.

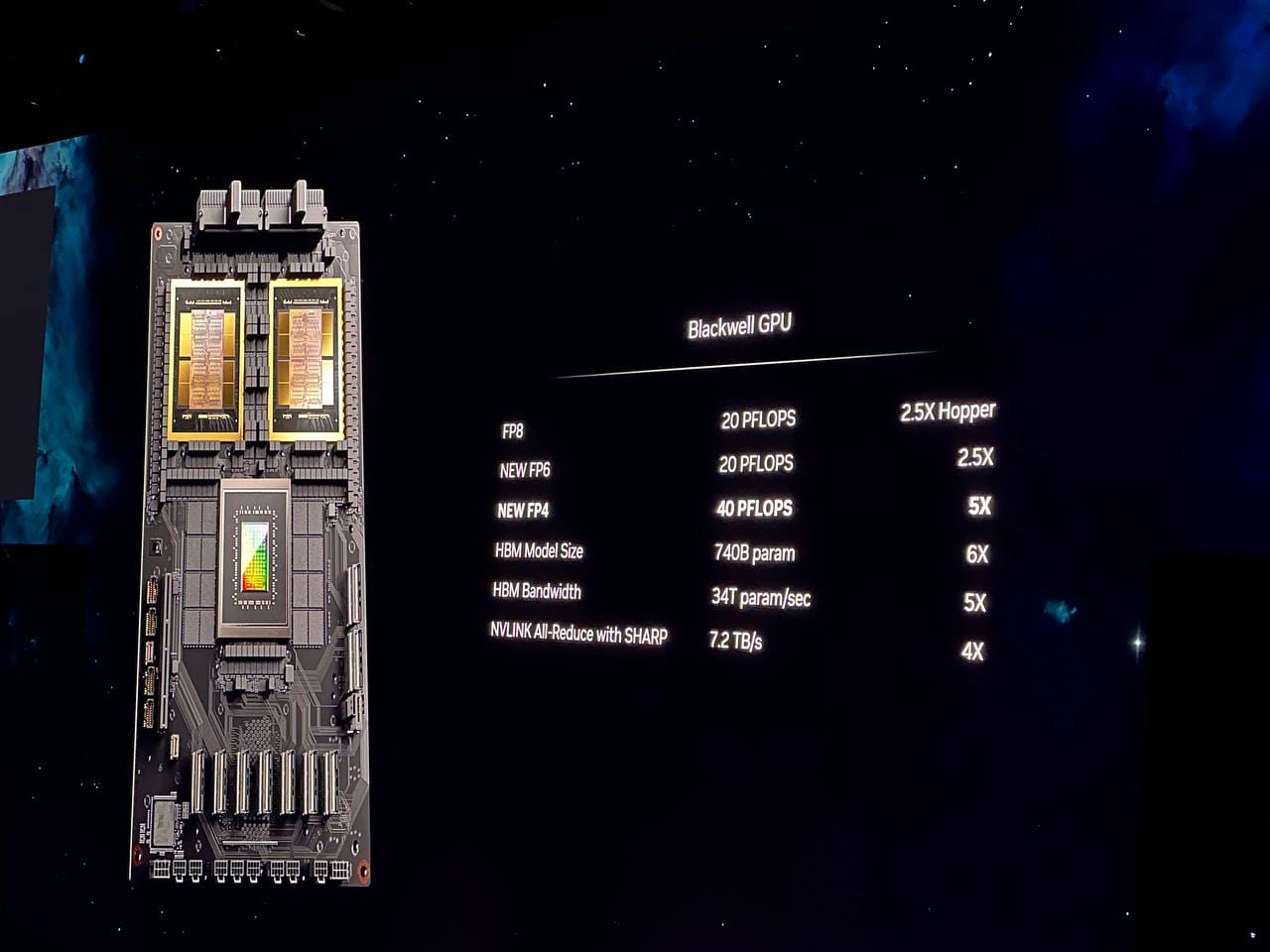

Blackwell architecture GPUs pack 208 billion transistors and are manufactured using a custom-built TSMC 4NP process. Micro-tensor scaling support and NVIDIA's dynamic range management algorithms, integrated into the NVIDIA TensorRT™-LLM and NeMo Megatron frameworks, let Blackwell support double the compute and model sizes through its new 4-bit floating point (FP4) AI inference capabilities. Meanwhile, the latest iteration of NVIDIA NVLink® provides 1.8TB/s of bidirectional throughput per GPU, enabling high-speed communication among up to 576 GPUs for the most complex LLMs. Blackwell-powered GPUs also feature a dedicated RAS (reliability, availability, and serviceability) engine, a dedicated decompression engine, and advanced confidential computing capabilities that protect models and customer data without compromising performance.
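Why does a 4-bit format "double" the supported model size? Each weight takes half the storage of an 8-bit one, and per-block scale factors keep small 4-bit codes usable across a tensor's dynamic range. The sketch below is a toy illustration under stated assumptions, not NVIDIA's micro-tensor scaling implementation. It assumes the E2M1 FP4 encoding (magnitudes 0 to 6), a hypothetical block size of 32, and a plain floating-point scale per block. The names `quantize_block`, `quantize`, and `weight_bytes` are illustrative only.

```python
# Toy sketch of block-scaled 4-bit floating point (FP4, E2M1) quantization.
# ASSUMPTIONS: the block size (32) and the plain-float per-block scale are
# illustrative choices; this is not NVIDIA's micro-tensor scaling implementation.
from typing import List, Tuple

# Non-negative magnitudes representable in FP4 E2M1; the sign is kept separately.
E2M1_VALUES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
E2M1_MAX = E2M1_VALUES[-1]


def quantize_block(block: List[float]) -> Tuple[float, List[float]]:
    """Scale a block so its largest magnitude maps to 6.0, then round each
    value to the nearest signed E2M1 value. Returns (scale, codes)."""
    amax = max(abs(x) for x in block)
    scale = amax / E2M1_MAX if amax > 0 else 1.0
    codes = []
    for x in block:
        mag = abs(x) / scale
        nearest = min(E2M1_VALUES, key=lambda v: abs(v - mag))
        codes.append(nearest if x >= 0 else -nearest)
    return scale, codes


def dequantize_block(scale: float, codes: List[float]) -> List[float]:
    """Recover approximate values by multiplying the codes by the block scale."""
    return [scale * c for c in codes]


def quantize(values: List[float], block_size: int = 32) -> List[Tuple[float, List[float]]]:
    """Split a tensor (here a flat list) into blocks, each with its own scale."""
    return [quantize_block(values[i:i + block_size])
            for i in range(0, len(values), block_size)]


def weight_bytes(num_params: int, bits: int) -> float:
    """Raw weight storage in bytes, ignoring scale factors and other overhead."""
    return num_params * bits / 8


if __name__ == "__main__":
    params = 10 * 10**12  # the 10-trillion-parameter scale cited for Blackwell
    for bits in (16, 8, 4):
        print(f"{bits}-bit weights: {weight_bytes(params, bits) / 1e12:.0f} TB")
    scale, codes = quantize_block([0.12, -0.03, 0.6, -0.45])
    print(codes)  # [1.0, -0.5, 6.0, -4.0]
    print(dequantize_block(scale, codes))
```

Under these assumptions, 10 trillion weights need about 20 TB at 16 bits, 10 TB at 8 bits, and 5 TB at 4 bits, before counting scale factors. Giving each small block its own scale is what lets the coarse 4-bit grid track both large and small values in the same tensor.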

The NVIDIA GB200 Grace Blackwell Superchip connects two NVIDIA B200 Tensor Core GPUs, the successors to the H100, to an NVIDIA Grace CPU over a 900GB/s ultra-low-power NVLink chip-to-chip interconnect. For additional AI performance, GB200 Superchips can be connected through the NVIDIA Quantum-X800 InfiniBand and Spectrum™-X800 Ethernet platforms, which deliver networking speeds of up to 800Gb/s. The GB200 is also the key component of the NVIDIA GB200 NVL72, a rack-scale system that combines 36 Grace Blackwell Superchips, for a total of 72 B200 GPUs and 36 Grace CPUs, and delivers 720 petaflops of AI training performance and 1,440 petaflops (1.44 exaflops) of inference performance. Compared with the same number of NVIDIA H100 GPUs, the NVL72 provides up to a 30x performance increase for LLM inference and up to a 25x reduction in cost and energy consumption.
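The NVL72 figures follow from simple arithmetic on the superchip configuration. The sketch below reproduces the GPU and CPU counts and divides the rack-level totals by the GPU count. The per-GPU results are only averages implied by the quoted totals, since the article does not give per-GPU figures or the numeric precision behind each total. The helper names `rack_counts` and `per_gpu_pflops` are hypothetical.

```python
# Back-of-the-envelope arithmetic for the GB200 NVL72 figures quoted above.
# ASSUMPTION: per-GPU values are simple averages of the rack totals, for
# illustration only; they are not published per-GPU specifications.
from typing import Tuple

GPUS_PER_SUPERCHIP = 2  # two B200 GPUs per GB200 Superchip
CPUS_PER_SUPERCHIP = 1  # one Grace CPU per GB200 Superchip


def rack_counts(superchips: int) -> Tuple[int, int]:
    """Return (gpus, cpus) for a rack built from GB200 Superchips."""
    return superchips * GPUS_PER_SUPERCHIP, superchips * CPUS_PER_SUPERCHIP


def per_gpu_pflops(total_pflops: float, gpus: int) -> float:
    """Average petaflops per GPU implied by a rack-level total."""
    return total_pflops / gpus


gpus, cpus = rack_counts(36)
training_pf, inference_pf = 720, 1440

print(gpus, cpus)                          # 72 36
print(per_gpu_pflops(training_pf, gpus))   # 10.0
print(per_gpu_pflops(inference_pf, gpus))  # 20.0
print(inference_pf / 1000, "exaflops")     # 1.44 exaflops
```

The 2:1 inference-to-training ratio carries straight through to the per-GPU averages, because both totals are divided by the same 72 GPUs.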

The NVIDIA Blackwell portfolio will be available from select NVIDIA partners and through NVIDIA DGX™ Cloud later this year, and it will be supported by the NVIDIA AI Enterprise software platform. More details on the Blackwell architecture are available in the NVIDIA GTC keynote.