This Week in AI: March 31 – April 6

Hailo secured additional funds and introduced Hailo-10 for edge devices; Luminance raised $40 million in a Series B round led by March Capital; the US and the UK signed a partnership on AI safety; Stability AI launched Stable Audio 2.0; Google won big in the AI talent-luring battle; and more.

Every weekend, we bring together a selection of the week's most relevant headlines on AI. Each weekly selection covers startups, market trends, regulation, debates, technology, and other trending topics in the industry.

Hailo secures an additional $120M and introduces Hailo-10, an AI accelerator for edge devices

Hailo is a chipmaker specializing in AI chips for low-power devices such as personal electronics and in-car infotainment systems. The company recently announced an extension of its Series C funding round, raising an additional $120 million. The extension was led by a mix of existing and new investors, including the Zisapel family, Gil Agmon, Delek Motors, Alfred Akirov, DCLBA, Vasuki, OurCrowd, Talcar, Comasco, Automotive Equipment (AEV), and Poalim Equity. The Series C extension brings Hailo's total funding to $340 million.

Hailo has also introduced Hailo-10, a high-performance generative AI accelerator for edge devices that can run Llama2-7B at up to 10 tokens per second (TPS) while drawing under 5W of power. Hailo-10 can also generate Stable Diffusion 2.1 images in under 5 seconds each at the same ultra-low power consumption. By enabling generative AI applications to run on edge devices such as consumer electronics and smart vehicles, Hailo-10 addresses privacy concerns, removes the need for network connectivity, and reduces reliance on power-hungry cloud data centers.
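
The quoted figures support a quick back-of-the-envelope energy estimate, since energy is power multiplied by time. The sketch below assumes the chip draws its full 5W ceiling while working, which Hailo does not state. Under that assumption, the peak rate of 10 tokens per second works out to under 0.5 J per token, and an image produced in under 5 seconds costs under 25 J.

```python
# Back-of-the-envelope energy estimate from Hailo's published Hailo-10 figures.
# Assumption: the chip draws its full 5 W ceiling while working, so each result
# is an upper bound at the quoted rates (slower generation costs more per token).

def energy_joules(power_watts: float, seconds: float) -> float:
    """Energy (J) = power (W) x time (s)."""
    return power_watts * seconds

POWER_W = 5.0            # "under 5W"
TOKENS_PER_SECOND = 10   # Llama2-7B, "up to 10 TPS"
SECONDS_PER_IMAGE = 5.0  # Stable Diffusion 2.1, "under 5 seconds per image"

joules_per_token = energy_joules(POWER_W, 1 / TOKENS_PER_SECOND)
joules_per_image = energy_joules(POWER_W, SECONDS_PER_IMAGE)

print(f"< {joules_per_token:.2f} J per token at 10 TPS")  # < 0.50 J per token at 10 TPS
print(f"< {joules_per_image:.1f} J per image")            # < 25.0 J per image
```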

Luminance raised $40 million in a Series B round led by March Capital

Luminance's Series B round also drew backing from National Grid Partners and other existing investors, including Slaughter and May. Luminance is an industry leader in 'legal-grade' AI. Its main offering is a specialist legal LLM that can automate the generation, negotiation, and analysis of legal documents, including contracts. Luminance already counts 600 organizations in 70 countries among its customers, across several industries, from global manufacturers and insurance companies to pharmaceutical giants. The company's AI has had some recent breakthroughs. These include fully automating a contract negotiation, with no human intervention, during a 2023 live demonstration for the BBC. The AI was also used for the first time at London's Old Bailey, where it helped counsel streamline the review of evidence, shortening the process by four weeks. The new funds will accelerate Luminance's global growth, especially in the US, where the company generates about one-third of its revenue.

Read AI, which specializes in AI-generated meeting summaries, announced its Series A

Read AI's $21 million Series A was led by Goodwater Capital and Madrona Venture Group, the latter an existing investor. As part of the deal, Goodwater Partner Coddy Johnson will join Madrona's Matt McIlwain and Read AI co-founder and CEO David Shim on Read AI's Board of Directors.

Alongside the Series A announcement, Read AI is launching "Readouts", generative AI summaries for email and messaging services such as Gmail, Outlook, Teams, and Slack. Readouts build on the adoption of Read AI's meeting assistants for Microsoft Teams, Google Meet, and Zoom. In 2023, those assistants saw a 12x increase in monthly active users and a 15x increase in measured meetings across more than 40 countries. Readouts are available for free to all Read AI users. On average, Read AI summaries condense around 50 emails or messages across 7 to 10 participants into a single topic. Readouts update automatically throughout the day and can:

- prioritize the latest updates;
- summarize messages and threads;
- track assigned action items and the most relevant questions along with their answers;
- display updates in chronological order;
- offer overviews;
- cite the sources of the presented information.

New Read AI users can access Readouts upon sign-up, while existing users can access them via the available integrations.

The US and the UK have signed a formal partnership on AI safety

The groundbreaking partnership will include at least one joint testing exercise on a publicly accessible model, and both parties are considering personnel exchanges between their AI safety institutes. More generally, the two countries plan to share essential information about the risks and capabilities of AI models, as well as the results of the technical research on AI safety and security that each conducts independently. Both countries have also committed to developing similar AI safety agreements with other countries. US Commerce Secretary Gina Raimondo and British Technology Secretary Michelle Donelan signed the memorandum of understanding establishing the partnership. It follows up on the commitments made at the UK's first global AI Safety Summit in November 2023. The partnership also comes amid rising concerns about generative AI, including fears that it will make jobs obsolete, upend elections, and potentially pose an existential risk to humanity.

Creatives are taking a stand against the irresponsible deployment of AI-powered media generation tools

“This assault on human creativity must be stopped,” reads an open letter recently signed by over 200 musicians. The signatories include industry-renowned names such as Billie Eilish, the Bob Marley estate, Elvis Costello, Greta Van Fleet, Imagine Dragons, Jon Bon Jovi, Kacey Musgraves, Katy Perry, Mac DeMarco, Miranda Lambert, Mumford & Sons, Nicki Minaj, Noah Kahan, Pearl Jam, Sheryl Crow, Zayn Malik, and many others. The letter restates what many already know: when used irresponsibly, AI can threaten our rights to privacy, identity, and the protection of intellectual property. The letter also argues that the unconsented use of musicians' work to train AI models can prevent artists from making a living. Moreover, these concerns pile on top of problems the music industry has at best partially resolved since the piracy era. Spotify’s average royalty per stream is about $0.0038, an already unsatisfactory starting point for artists who now also have to compete against convincing deepfakes and AI music generators.

The Artist Rights Alliance's letter follows a similar open letter signed last year by over 15,000 writers, including James Patterson, Michael Chabon, Suzanne Collins, Roxane Gay, and others. That letter, addressed to the CEOs of major tech companies, protests the lack of compensation for using authors' works to train massive LLMs. "Millions of copyrighted books, articles, essays, and poetry provide the ‘food’ for AI systems, endless meals for which there has been no bill," it states. So far, though, there is no indication that anyone in the model-training business is listening. Most major AI firms have set up mechanisms that let creatives request that their intellectual property be excluded from model training. However, it is far from clear that filling out the paperwork makes any difference. Regulators and lawmakers are only beginning to work out whether copyright protections should apply to data scraping and model training. As a result, it may take some time before even a semblance of a solution appears on the horizon.

OctoAI launched OctoStack, a production-ready generative AI inference stack to optimize local enterprise LLM serving

One of the latest talking points in the generative AI landscape is the famed 'data flywheel'. This is a virtuous circle in which model interaction data becomes training data for customized fine-tuning, improving customer experiences and significantly increasing businesses' ROI. Until recently, such an approach was only available to organizations with enough resources to solve the challenges it involves. OctoStack is one of several products targeting demand for solutions that let enterprises:

- maintain the required level of control over models and data;
- experiment with and choose between the latest closed and open-source models through a unified API;
- operate their generative AI stack without deeply specialized talent.

According to OctoAI, OctoStack stood out in initial benchmarks against alternatives, showing 4x better resource allocation and a 50% reduction in operational costs. OctoStack also builds on OctoAI's early focus on inference. The company says its team's combined experience in inference optimization translates into efficient performance, highly customizable models, and a reliable production stack. OctoStack is generally available to all customers on request. Interested customers start by contacting the OctoAI team, which can provide a live demo and go through the hardware requirements. The team can also share details of OctoAI's current promotion to help users of OpenAI models integrate open-source LLMs.

Cloudflare's Workers AI lets developers deploy AI applications on Cloudflare's network directly from Hugging Face

The general availability of Workers AI makes Cloudflare the first serverless inference partner with a Hugging Face Hub integration. The integration enables easy, fast, and affordable global model deployment without managing infrastructure or paying for unused compute capacity. These features make Cloudflare an appealing option for enterprises and organizations looking to unlock the value AI can bring to their current workloads. Cloudflare has deployed GPUs in over 150 cities worldwide, and each location is aware of its total AI inference capacity. This enables capacity-aware request routing: if a request would be queued in the current city, it can be rerouted to a less busy location, keeping delivery times low even when traffic is high. Alongside Workers AI, Cloudflare's AI Gateway lets developers evaluate requests and route them to the most suitable model and provider. Cloudflare is also working toward letting developers create and run fine-tuning jobs directly on the platform. Developers can choose from 14 Hugging Face-hosted models curated and optimized for Workers AI. These models cover text generation, embeddings, and sentence similarity, and can be deployed instantly with one click.
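
Cloudflare has not published its routing algorithm. The sketch below is a hypothetical illustration of the capacity-aware routing idea described above. The `Location` class, the `route` function, and the slot counts are all invented for the example: a request is served locally when the local site has spare capacity, and otherwise goes to the site with the most free slots.

```python
from dataclasses import dataclass


@dataclass
class Location:
    """A hypothetical inference site that knows its own GPU capacity."""
    name: str
    capacity: int        # maximum concurrent inference requests
    in_flight: int = 0   # requests currently being served

    @property
    def free_slots(self) -> int:
        return self.capacity - self.in_flight


def route(origin: str, locations: dict[str, Location]) -> str:
    """Serve locally when there is spare capacity; otherwise reroute the request
    to the least busy site. If every site is full, keep it local (it queues there)."""
    local = locations[origin]
    target = local
    if local.free_slots <= 0:
        least_busy = max(locations.values(), key=lambda loc: loc.free_slots)
        if least_busy.free_slots > 0:
            target = least_busy
    target.in_flight += 1
    return target.name


sites = {
    "London": Location("London", capacity=2, in_flight=2),
    "Paris": Location("Paris", capacity=4, in_flight=1),
    "Frankfurt": Location("Frankfurt", capacity=4, in_flight=3),
}
print(route("London", sites))  # London is full, so the request goes to Paris
```

Keeping the request local when every site is full avoids bouncing traffic around the network for no gain. A real system would also weigh network latency between cities, which this sketch ignores.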

Stability AI launched Stable Audio 2.0

Stable Audio 2.0 can generate 44.1 kHz stereo tracks up to three minutes long from a natural language prompt. It also features a novel audio-to-audio capability that lets users upload an audio sample and combine it with text prompts to generate a variety of fully produced sounds. Stable Audio 1.0 was released in September 2023 and went on to be named one of TIME’s Best Inventions of 2023. Its successor expands the tools available to creators everywhere, enabling the production of coherent full-length tracks, melodies, and sound effects, among other audio types. The model is available on the Stable Audio website and will soon be available through the Stable Audio API. Stability AI's terms of service forbid uploading audio that contains copyrighted material. The company has also partnered with Audible Magic to use its content recognition technology to prevent copyright infringement. Stable Audio 2.0's capabilities are currently showcased on Stable Radio, a 24/7 live stream of Stable Audio-generated content on the Stable Audio YouTube channel.
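
To put the output specification in concrete terms, the arithmetic below computes the raw size of a maximum-length track. The article gives only the sample rate, the channel count, and the three-minute limit. The 16-bit sample depth is an assumption (CD-quality PCM), not a documented property of Stable Audio's output files.

```python
# Uncompressed size of a maximum-length Stable Audio 2.0 track.
# Assumption: 16-bit PCM samples; the article only specifies 44.1 kHz stereo
# and a three-minute maximum, not the bit depth or file format.
SAMPLE_RATE_HZ = 44_100
CHANNELS = 2                 # stereo
DURATION_S = 3 * 60          # three minutes
BYTES_PER_SAMPLE = 2         # 16-bit (assumed)

total_samples = SAMPLE_RATE_HZ * CHANNELS * DURATION_S
size_bytes = total_samples * BYTES_PER_SAMPLE
bitrate_kbps = SAMPLE_RATE_HZ * CHANNELS * BYTES_PER_SAMPLE * 8 / 1000

print(total_samples)                 # 15876000
print(f"{size_bytes / 1e6:.2f} MB")  # 31.75 MB
print(f"{bitrate_kbps:.1f} kbps")    # 1411.2 kbps
```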

SiftHub takes the next step in its journey with the closure of its seed funding round

SiftHub raised $5.5 million in a seed funding round led by Matrix Partners India and Blume Ventures, with participation from Neon Fund and a selection of angel investors. The funds will accelerate the development of SiftHub's platform, which is designed to optimize sales and presales workflows. SiftHub boosts teams' productivity by helping them find up-to-date information and by providing useful insights grounded in company knowledge. It does so without giving proprietary model vendors access to sensitive information. SiftHub integrates with sales-specific and productivity apps so that all enterprise information can be accessed from a single interface. In short, the platform delivers "secure, private, access-controlled answers that trace back to its source." As SiftHub begins its journey, it is looking to grow its team. Access to the platform can currently be requested via a waitlist.

Google won big in the AI talent-luring battle by hiring OpenAI's former head of developer relations Logan Kilpatrick

Kilpatrick left OpenAI last month and recently announced that he would join Google to lead product for AI Studio and support the Gemini API. His hiring follows news that Mustafa Suleyman and about 70 Inflection AI employees would join Microsoft to create a new organization called 'Microsoft AI'. Suleyman will lead it and report directly to Microsoft CEO Satya Nadella. Kilpatrick's strong relationships with developers likely made him an attractive hire for Google. After he announced his departure from OpenAI, several developers came together on OpenAI's Developer Forum to thank him for his service. Google AI Studio lets developers build AI applications (or wrappers) on top of Gemini via API integration. In the same post announcing his new role at Google, Kilpatrick stated his commitment to "make Google the best home for developers building with AI." News of the hire comes amid reports that tech giants have been going the extra mile to secure top talent. Reportedly, Mark Zuckerberg has been writing personal emails to lure Google DeepMind AI researchers. Sergey Brin also reportedly contacted a Google employee directly to convince them to turn down an OpenAI job offer.

Higgsfield AI secured $8M in seed funding to democratize social media video creation

Higgsfield AI co-founder and CEO Alex Mashrabov credits his experience co-founding AI Factory and serving as Head of Generative AI at Snap with laying the foundation for Higgsfield AI. At Snap, Mashrabov and his team developed several ML-powered augmented reality effects and My AI, the second-largest consumer AI chatbot. Higgsfield AI's first product is Diffuse, a mobile app for Android and iOS that lets users create realistic-looking versions of themselves in videos. Users can work from a library of presets and a user-provided selfie, or from a combination of text prompts and reference media. Higgsfield AI plans to use the funds to accelerate the development of Diffuse, moving from the current preview of the Higgsfield model to a version that produces more controllable and realistic outputs. The seed round was led by Menlo Ventures and backed by Charge Ventures, Bitkraft Ventures, K5 Tokyo Black, AI Capital Partners (Alpha Intelligence Capital’s U.S.-based fund), DVC, other funds, and several angel investors.

Sam Altman no longer controls OpenAI's Startup Fund

A filing with the US Securities and Exchange Commission confirms that OpenAI CEO Sam Altman no longer owns or controls the fund. The change follows outside scrutiny of the fund's unusual structure. Altman raised the fund from outside partners, including Microsoft, and made its investment decisions, while OpenAI maintained that Altman had no financial interest in the fund despite being its owner. An OpenAI spokesperson has said that the fund's initial structure was temporary. According to the spokesperson, transferring control to Ian Hathaway, an experienced investor who has overseen the OpenAI Startup Fund's accelerator program, should clarify the matter.

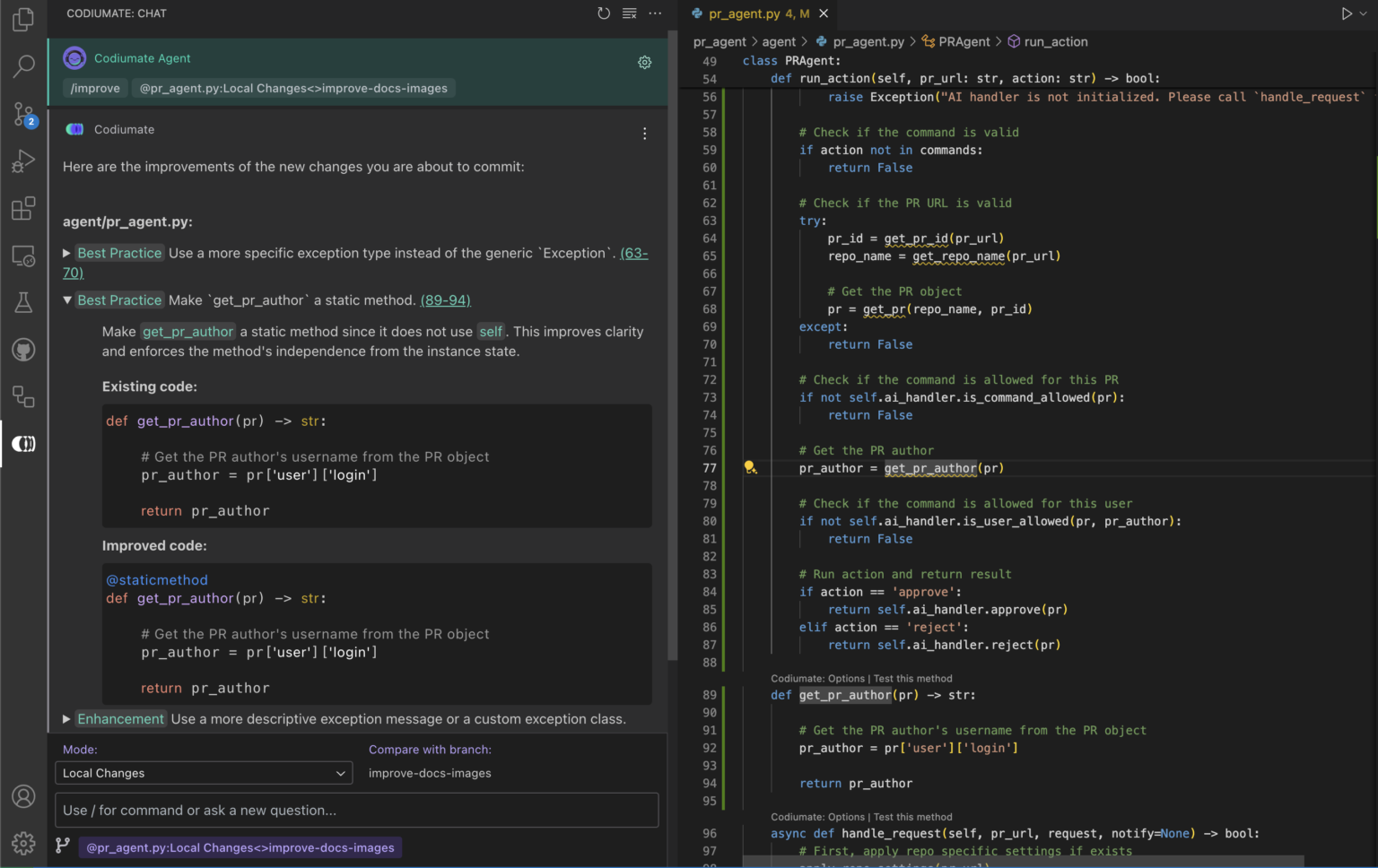

Codium AI launches Codiumate, a semi-autonomous agent that assists developers and teams in coding task planning

Codiumate is a coding agent that takes as input the context developers highlight as relevant to the task at hand. Developers then enrich this context with a text prompt describing their plan, which they can optionally revise and detail further. Once the plan is set, the second stage is implementation: developers open files in the order specified in the plan and use Codiumate's autocomplete to carry it out. As they work, developers can go back to review progress or address issues as needed. Once most of the code is written, Codiumate can provide feedback in the chat panel until the task is complete. The assistant can also identify duplicate code and draft documentation for developers to reference afterward. Codiumate deletes any collected code or information as soon as each session ends, and the company complies with security and privacy standards such as SOC 2. Codiumate will soon be able to index the local repository automatically, so developers will no longer need to highlight the relevant context by hand. Codiumate is currently offered as part of a library of AI development agents. Pricing includes a free tier for individual developers, a $19 monthly subscription for small teams, and custom pricing for enterprises.

The US and the EU discussed key topics on AI at the sixth ministerial meeting of the Trade and Technology Council (TTC)

The meeting took place in Leuven, Belgium, on April 4–5. Both parties published a joint statement detailing, among other things, the key outcomes on the topics discussed. On artificial intelligence, the EU and the US committed to continued dialogue and to advancing the implementation of the TTC Joint Roadmap for Trustworthy AI and Risk Management. The roadmap aims to standardize emerging AI governance and regulatory systems and enhance international cooperation. One of the first steps is publishing a list of essential AI terms with jointly accepted definitions. In parallel, working groups staffed by agencies from both parties have made progress in defining critical milestones for deliverables. These deliverables address global challenges such as extreme weather, energy, emergency response, reconstruction, healthcare, and agriculture. The statement also outlines possible cooperation with partners in the UK, Canada, and Germany to accelerate efforts to broaden access to AI in Africa. It further specifies plans to create a Quantum Task Force to bridge gaps between the parties' R&D efforts. The task force's work would include developing unified benchmarks, identifying critical components, and advancing international standards. Other technology topics covered include R&D for 6G wireless communication systems and semiconductors, as well as research cooperation standards for biotechnology and clean energy.

Resemble AI launched Rapid Voice Cloning

The technology allows users to create an AI-generated voice clone from samples as short as 10 seconds. Resemble AI uses machine learning models to analyze and replicate the characteristics that distinguish the sampled voice, producing a high-fidelity, ready-to-use voice clone. The Rapid Voice Cloning announcement compares Resemble AI's results on previously encountered voice samples with those of VALL-E and XTTS-v2, two state-of-the-art AI voice cloning models. One key differentiator for Resemble AI’s Rapid Voice Cloning technology is how accurately it captures the nuances of individual accents, producing voices that faithfully mimic the unique traits of each original voice. The technology also includes safeguards such as built-in consent and authorization mechanisms. These help ensure that users have the required permissions, including clear consent from the voice owner or owners, before they can clone a voice sample. Users must also agree to Resemble AI's terms of service.

AssemblyAI introduced Universal-1, a multilingual speech-to-text model

Universal-1 is trained on over 12.5 million hours of multilingual audio to deliver fast, accurate speech-to-text in English, Spanish, French, and German, regardless of background conditions. According to AssemblyAI, Universal-1 offers:

- a 10% improvement in speech-to-text accuracy over the next-best commercially available system;
- 30% fewer hallucinations than Whisper Large-v3;
- a preference from human evaluators over Conformer-2 (AssemblyAI's previous model);
- the ability to transcribe multiple languages within a single file.

Among other improvements, Universal-1 is also 13% more accurate than Conformer-2 at automatic timestamping. Moreover, its efficient parallelization enables fast turnaround for long audio files: Universal-1 is 5x faster than a Whisper Large-v3 implementation on the same hardware. Universal-1 is currently available in English and Spanish only, with French and German coming soon. It can be accessed via the AssemblyAI API, or as one of the models used in the Best automatic speech recognition (ASR) tier, designed for applications that require the highest accuracy. The Nano ASR tier is a lighter, more affordable service for use cases such as search and topic detection.
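
Speech-to-text accuracy claims are usually expressed in terms of word error rate (WER), the standard ASR metric. The sketch below computes WER with a word-level edit distance and shows how a relative improvement is calculated. It assumes that the quoted 10% figure is a relative WER reduction, which the summary above does not specify. The transcripts and WER values are hypothetical.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words,
    using a word-level Levenshtein (edit-distance) table."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # dist[i][j] = minimum edits to turn the first i reference words into the first j hypothesis words
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution (or match when cost is 0)
            )
    return dist[len(ref)][len(hyp)] / len(ref)


def relative_wer_reduction(baseline_wer: float, new_wer: float) -> float:
    """Fractional reduction in WER relative to a baseline system."""
    return (baseline_wer - new_wer) / baseline_wer


reference = "the quarterly report is due on friday"
print(word_error_rate(reference, "the quarterly report was due friday"))  # 2 errors / 7 words
# Hypothetical WERs: a baseline at 10.0% and a new model at 9.0% is a 10% relative reduction.
print(f"{relative_wer_reduction(0.100, 0.090):.0%}")
```

The distinction matters when reading vendor comparisons. A 10% relative reduction from a 10% WER is only one percentage point in absolute terms.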

SiMa.ai announced an additional $70M in raised funds and its next-gen ML system-on-a-chip

The additional investment was led by Maverick Capital, with participation from Point72, Jericho, and existing investors Amplify Partners, Dell Technologies Capital, Lip-Bu Tan, and others. SiMa.ai has raised $270 million to date. The new funds will be used to meet customer demand for the first-generation Machine Learning System-on-Chip (MLSoC) and to continue developing the second-generation MLSoC, planned for release in Q1 2025. Alongside the second-generation MLSoC, SiMa.ai will unveil a unified, software-centric platform for all edge AI. The first-generation MLSoC is vision-centric. The second generation, by contrast, is designed to adapt to any framework, network, model, sensor, and modality, addressing edge customers' AI needs in a single unified system. The chip will feature an Arm Cortex-A CPU and Synopsys EV74 Embedded Vision Processors, and will be built on TSMC’s N6 technology.

OpenAI introduces new fine-tuning API features and announces the expansion of the Custom Models program

The new fine-tuning features follow the release of the GPT-3.5 self-serve fine-tuning API in August 2023. The newly introduced features include epoch-based checkpoint creation, a comparative Playground UI, support for third-party integrations, the ability to compute metrics over the entire validation dataset, hyperparameter configuration, and improvements to the fine-tuning dashboard.
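
Features such as computing metrics over the entire validation dataset assume that a validation file is supplied alongside the training file. The sketch below prepares both files in the JSON Lines chat format that OpenAI documents for fine-tuning chat models. The invoice examples are hypothetical. The SDK calls appear only as comments because they require an API key and should be checked against the current API reference.

```python
import json
import random

# Hypothetical toy examples in the chat format used for OpenAI chat-model
# fine-tuning: one JSON object per line, each holding a "messages" list.
examples = [
    {"messages": [
        {"role": "system", "content": "You answer questions about invoices."},
        {"role": "user", "content": f"What is the total of invoice {i}?"},
        {"role": "assistant", "content": f"Invoice {i} totals ${100 + i}.00."},
    ]}
    for i in range(20)
]


def split_train_validation(rows, validation_fraction=0.2, seed=0):
    """Shuffle a copy of the rows and hold out a fraction for validation."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)
    n_validation = round(len(rows) * validation_fraction)
    return rows[n_validation:], rows[:n_validation]


def to_jsonl(rows) -> str:
    """Serialize rows as JSON Lines (one object per line)."""
    return "".join(json.dumps(row) + "\n" for row in rows)


train_rows, validation_rows = split_train_validation(examples)
train_jsonl, validation_jsonl = to_jsonl(train_rows), to_jsonl(validation_rows)
print(len(train_rows), len(validation_rows))  # 16 4

# Not executed here: after writing train_jsonl and validation_jsonl to files,
# the `openai` Python SDK (v1.x) could upload them and start a job roughly like this:
# from openai import OpenAI
# client = OpenAI()
# train = client.files.create(file=open("train.jsonl", "rb"), purpose="fine-tune")
# val = client.files.create(file=open("validation.jsonl", "rb"), purpose="fine-tune")
# job = client.fine_tuning.jobs.create(
#     model="gpt-3.5-turbo", training_file=train.id, validation_file=val.id,
#     hyperparameters={"n_epochs": 3},
# )
```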

The Custom Models program was announced at DevDay in November 2023. The program is designed to train and optimize models for a specific domain in collaboration with selected OpenAI researchers. The next step in its evolution is the assisted fine-tuning offering. In this offering, OpenAI's technical teams apply additional customization techniques and help organizations set up efficient training data pipelines, evaluation systems, and custom methods and parameters. The program also enables customers to partner with OpenAI to create custom-trained models from scratch. Developers can get started with self-serve fine-tuning through the fine-tuning API documentation, while organizations interested in the Custom Models program can contact OpenAI.

S&P Global launched the S&P AI Benchmarks by Kensho to assess LLM performance in business and finance

The benchmarking results will be displayed on a scoreboard to give existing and prospective customers a transparent, comprehensive view of how different LLMs perform in industry contexts. The S&P AI Benchmarks by Kensho evaluate how well models solve quantitative reasoning questions, understand financial concepts, and extract key financial information from documents. Together, these tests provide standardized assessments of LLM performance geared toward the finance industry. The benchmarks are backed by a rigorous validation process developed by a team of specialists, including academics, researchers, subject matter experts, and financial professionals from S&P Global's divisions. Contributors do not need to share any part of their model, because the submission process is based solely on model outputs. Once a contributor submits their model's outputs, a score is computed and displayed on the scoreboard. Users can request the removal of their scores at any time.
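
Because scoring depends only on submitted outputs, the protocol can be pictured as comparing answers against a privately held key. The sketch below is a hypothetical illustration, not Kensho's actual methodology. The question IDs, answer key, numeric tolerance, and scoreboard structure are all invented for the example.

```python
import math

# Hypothetical answer key held privately by the benchmark operator.
ANSWER_KEY = {
    "q1": 0.125,     # quantitative reasoning: numeric answer
    "q2": "EBITDA",  # financial concept: short text answer
    "q3": "2023",    # extraction: fiscal year found in a document
}


def is_correct(expected, submitted, rel_tol=1e-3) -> bool:
    """Numeric answers match within a relative tolerance; text answers match
    case-insensitively after trimming whitespace."""
    if isinstance(expected, float):
        try:
            return math.isclose(expected, float(submitted), rel_tol=rel_tol)
        except (TypeError, ValueError):
            return False
    return str(expected).strip().lower() == str(submitted).strip().lower()


def score_submission(outputs: dict) -> float:
    """Score a contributor using only their model's outputs; missing answers count as wrong."""
    correct = sum(
        1 for qid, expected in ANSWER_KEY.items()
        if qid in outputs and is_correct(expected, outputs[qid])
    )
    return correct / len(ANSWER_KEY)


scoreboard = {}
scoreboard["model-a"] = score_submission({"q1": 0.1251, "q2": "ebitda", "q3": "2022"})
scoreboard["model-b"] = score_submission({"q1": "0.125", "q2": "EBITDA", "q3": "2023"})
print(scoreboard)
scoreboard.pop("model-a", None)  # a contributor asks for their score to be removed
```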

Cohere introduced Command R+ and announced a new collaboration with Microsoft Azure

Cohere has followed up the launch of the Command R model with Command R+, a scalable model designed for real-world enterprise use cases. Command R+ offers a 128K-token context window and three headline capabilities:

- retrieval augmented generation (RAG) with citations to reduce hallucinations;
- coverage of 10 languages;
- business process automation.

According to Cohere, the model outperforms similar offerings in the scalable market category and competes with more expensive models on essential business tasks. Command R+ improves on Command R's advanced RAG capabilities, increasing accuracy and adding in-line citations to mitigate hallucinations. This lets enterprise users quickly access the information most relevant to the task at hand. Moreover, Command R+ can automate business workflows by combining multiple tools over several steps to accomplish complex tasks. Finally, the model's command of business English, French, Spanish, Italian, German, Portuguese, Japanese, Korean, Arabic, and Chinese enables it to serve geographically diverse organizations. As part of Cohere's new collaboration with Microsoft, Command R+ will be available first on Azure. Oracle Cloud Infrastructure (OCI) and other cloud providers will follow in the coming weeks. Starting April 4, Command R+ is also available on Cohere's hosted API. More information on availability, pricing, and privacy and security policies is available from Cohere.
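
In-line citations tie each part of a response to a source document. The sketch below illustrates only the retrieval-and-citation half of RAG, using a toy word-overlap retriever. It does not use Cohere's API, and the documents, IDs, and function names are hypothetical. In a full pipeline, an LLM would write the answer from the retrieved passages instead of returning them verbatim.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for real text processing."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: dict[str, str], k: int = 1) -> list[tuple[str, str]]:
    """Rank documents by word overlap with the query and keep the top k that overlap at all.
    A production system would use embeddings or a search index instead."""
    q = tokenize(query)
    ranked = sorted(documents.items(), key=lambda item: len(q & tokenize(item[1])), reverse=True)
    return [(doc_id, text) for doc_id, text in ranked[:k] if q & tokenize(text)]


def answer_with_citations(query: str, documents: dict[str, str], k: int = 1) -> str:
    """Return the supporting passages, each tagged with its source document ID."""
    hits = retrieve(query, documents, k)
    if not hits:
        return "No supporting documents found."
    return " ".join(f"{text} [{doc_id}]" for doc_id, text in hits)


docs = {
    "policy-7": "Refunds are issued within 14 days of a return request.",
    "faq-2": "Shipping to Europe takes 3 to 5 business days.",
    "hr-1": "Employees accrue 25 vacation days per year.",
}
print(answer_with_citations("How many days until refunds are issued?", docs))
```

Returning an explicit "no supporting documents" message instead of an uncited answer mirrors the hallucination-reducing goal described above. The system declines to answer when it has nothing to ground the answer in.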

DataStax announced it has acquired Langflow

Langflow integrates with the AI programming stack to flatten the learning curve involved in getting familiar with each of its elements (e.g., LLMs, vector databases, and frameworks like LangChain). Its interface offers key capabilities such as rapid experimentation, testing and iteration, and ease of use. Thanks to that interface, Langflow's community has become one of the fastest-growing in the open-source ecosystem. A working example in the announcement shows how easy it is to build on LangChain using Langflow. Langflow is even described as a visual way of building AI applications with the framework. In the announcement, DataStax also states its commitment to preserving Langflow's open-source nature, so that the project can continue to be driven by its community of users and contributors.

Meta continues to favor AI-generated content labeling over removal

Following criticism from its Oversight Board, Meta has announced that, starting next month, it will label a wider range of AI-generated content, including deepfakes, with a "Made with AI" identifier. Additionally, content determined to be manipulated in ways likely to deceive audiences on an important issue may be accompanied by relevant contextual information. However, Meta's labeling will apply only when the media contains industry-standard indicators of AI generation, or when users disclose at upload time that the content was generated using AI. As a result, content that meets neither condition will pass through Meta's filters unlabeled. Favoring labeling over removal may also encourage more AI-generated uploads. Such content has a better chance of remaining on the platform, and it stays unmarked if it carries no indicators. The changes to the labeling policy may partly reflect pressure from regulators and lawmakers, especially in the European Union. They have been urging social media platforms and other industry leaders to watermark deepfakes and other AI-generated content when possible.
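
The labeling conditions amount to a simple rule, and writing the rule out makes the gap explicit: AI-generated content with no indicator and no disclosure receives no label. The function below is a hypothetical model of the policy as described here, not Meta's implementation. The function and parameter names are assumptions, as is treating the deceptive-content context as a separate check. The "additional context" label name is also a placeholder.

```python
def labels_for_upload(has_industry_indicator: bool,
                      user_disclosed_ai: bool,
                      likely_to_deceive_on_important_issue: bool = False) -> list[str]:
    """Hypothetical model of the labeling conditions described above."""
    labels = []
    # "Made with AI" requires an industry-standard indicator or user disclosure.
    if has_industry_indicator or user_disclosed_ai:
        labels.append("Made with AI")
    # Separately, high-risk manipulated media may get extra context.
    if likely_to_deceive_on_important_issue:
        labels.append("additional context")  # placeholder name, not Meta's wording
    return labels


# An AI-generated video with no metadata that the uploader does not disclose:
print(labels_for_upload(has_industry_indicator=False, user_disclosed_ai=False))  # []
```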

Microsoft warns that China intends to use AI to disrupt elections in the US, South Korea, and India

According to the Microsoft Threat Analysis Center's East Asia report, China has already performed a dry run in Taiwan. There, it attempted a disinformation campaign featuring fake audio of Foxconn founder and former presidential candidate Terry Gou endorsing another candidate, a statement Gou never made. The suspected AI-generated content was briefly available on YouTube. The group Microsoft associates with this attack is also responsible for a series of AI-generated memes about presidential candidate William Lai and other Taiwanese officials. Other reported findings include:

- social media accounts managed by actors affiliated with the Chinese Communist Party (CCP) posing questions meant to unearth the issues currently dividing US voters;

- increased use of AI-generated content by Chinese actors aimed at sowing division over events such as the November 2023 Kentucky train derailment and the August 2023 Maui wildfires, the management of nuclear wastewater in Japan, and drug use, immigration policies, and racial tensions within the US;

- more generally, the report concludes that although China's geopolitical priorities appear unchanged, the country has increased the volume and sophistication of its influence operations and attacks targeting the South Pacific islands, the South China Sea region, and the US defense industrial base.

Beyond the operations Microsoft attributes to China, the report also details how North Korea continues to steal cryptocurrency, conduct software supply-chain attacks, and target its national security adversaries. According to the report, the aim is to generate revenue, most likely to fund the North Korean weapons program, and to collect intelligence on long-perceived adversaries including the US, South Korea, and Japan. The UN estimates that North Korean actors have stolen over $3 billion in cryptocurrency since 2017, with between $600 million and $1 billion taken in heists during 2023 alone. Microsoft and OpenAI have identified a North Korean threat actor, Emerald Sleet, that has been using LLM-powered tools to optimize its operations. The two companies have partnered to disable accounts and assets associated with the group.

Microsoft and OpenAI are reportedly planning a $100 billion data center

The massive $100 billion data center will reportedly include an AI supercomputer codenamed "Stargate", set to launch in 2028. Microsoft is expected to finance the project, which is estimated to be far more expensive than the biggest current data centers. The US-based "Stargate" is reportedly the final stage of a five-stage plan to build a series of increasingly powerful supercomputers. According to the reports, Microsoft is already working on a fourth-stage supercomputer slated for 2026. Unsurprisingly, one of the biggest challenges is acquiring enough AI chips to power the whole project. For reference, Nvidia's latest Blackwell B200 AI chips are expected to be priced between $30,000 and $40,000 each. However, the project is meant to work with chips from different providers, possibly including the custom-designed AI chips Microsoft introduced last November.

Credits from the AWS Activate program can now be redeemed for third-party model access

The AWS Activate program supports startup founders and includes up to $100,000 in AWS credits to cover the cost of using AWS's fully featured services. To further ease the path for founders building with the latest AI technologies, Amazon is now making AWS Activate credits redeemable for third-party model access via Amazon Bedrock. Bedrock is a fully managed service that gives startups access to foundation models from leading companies, including AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, and Stability AI. Access without upfront costs should help startups experiment with and evaluate models to find the one that best matches their goals and use cases. The decision to extend AWS Activate credits to Amazon Bedrock is partly due to Amazon's collaboration with Y Combinator. AWS has prepared a benefits package for the latest Y Combinator cohort that includes $500,000 in AWS credits. These credits can be used for AWS Trainium and Inferentia chips, reserved capacity of up to 512 NVIDIA H100 GPUs, and, thanks to the Bedrock expansion, the third-party foundation models hosted on the platform. Any startup can register with AWS Activate to start applying for benefits.